In today’s rapidly developing artificial intelligence landscape, a major breakthrough has quietly emerged: Chinese AI company DeepSeek has released its fully open-source DeepSeek R1 model, which rivals OpenAI’s o1 in performance at less than 4% of the price. This breakthrough not only makes high-performance AI more accessible but also opens up new possibilities for the entire AI industry.

Why is DeepSeek R1 So Important?

1. Perfect Balance of Performance and Cost

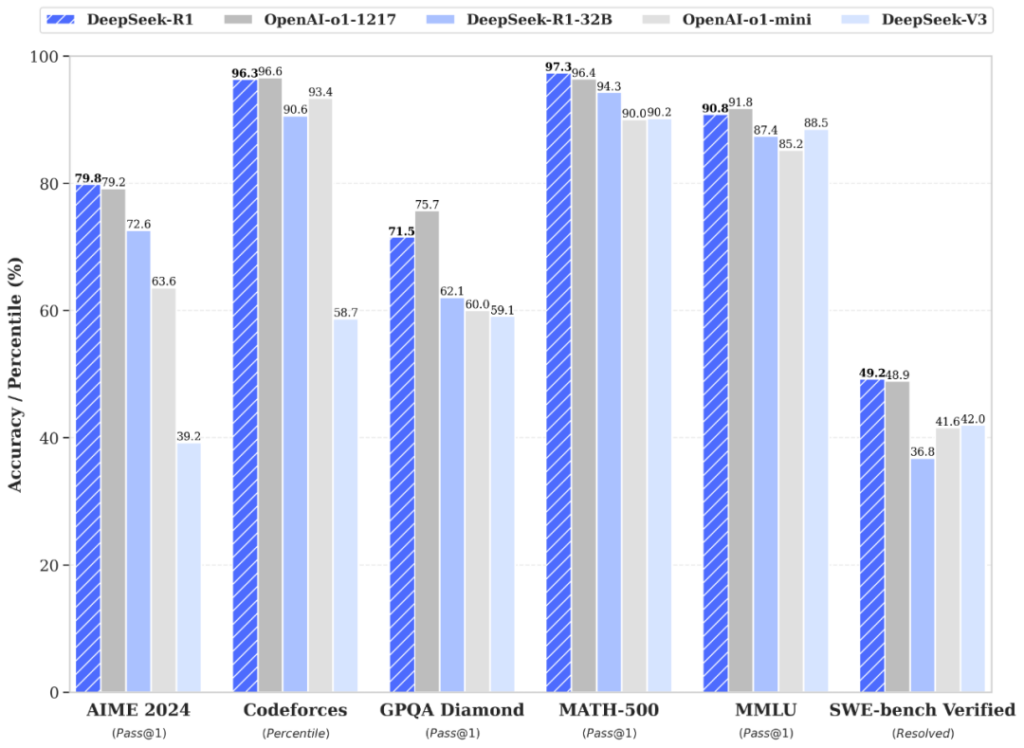

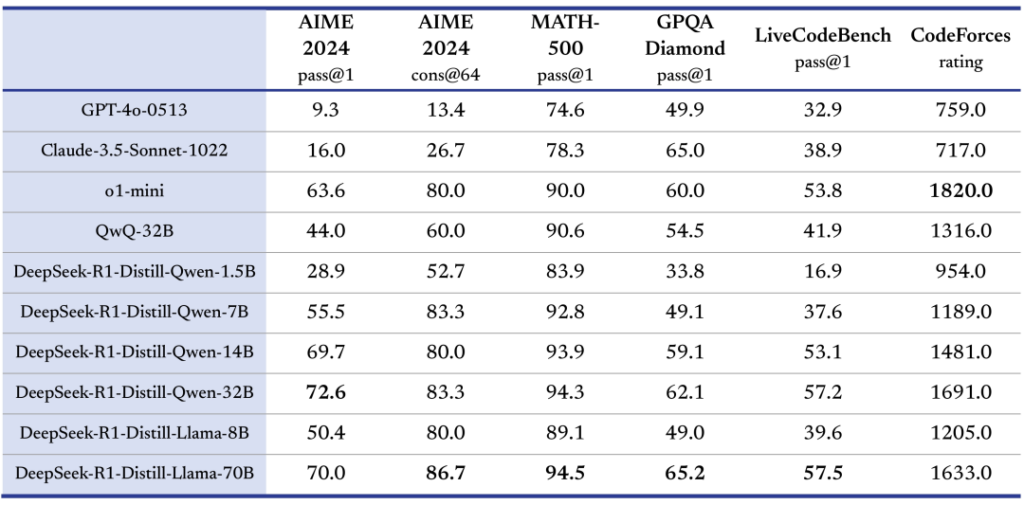

- Mathematical ability: Achieved a 79.8% pass rate on the AIME 2024 test, surpassing OpenAI o1

- Programming capability: Outperforms 96.3% of human participants on the Codeforces platform

- General knowledge: Achieves 90.8% accuracy on the MMLU benchmark

2. Revolutionary Pricing Strategy

OpenAI o1 charges $60 per million output tokens, while DeepSeek R1 charges only $2.2 for the same volume. This means:

- An enterprise spending tens of thousands of dollars a year on AI usage could cut that bill to hundreds or low thousands of dollars

- Individual developers can now afford high-performance AI services

Cost Comparison Between DeepSeek R1 and OpenAI o1

| Model | Input price (per million tokens) | Output price (per million tokens) |
|---|---|---|
| OpenAI o1 | $30 | $60 |
| DeepSeek R1 | $1.1 | $2.2 |
| Savings | 96.3% | 96.3% |
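The savings figures follow directly from the prices in the table above. The short Python sketch below reproduces that arithmetic and estimates the cost of a workload; the function names and the example workload (100 million input tokens and 20 million output tokens per year) are illustrative assumptions, not figures from DeepSeek or OpenAI.

```python
# Prices in USD per million tokens, copied from the comparison table above.
PRICES = {
    "OpenAI o1": {"input": 30.0, "output": 60.0},
    "DeepSeek R1": {"input": 1.1, "output": 2.2},
}


def savings_percent(baseline: float, alternative: float) -> float:
    """Percentage saved by paying `alternative` instead of `baseline`."""
    return (1 - alternative / baseline) * 100


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a workload, given token counts and the table prices."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    for kind in ("input", "output"):
        pct = savings_percent(PRICES["OpenAI o1"][kind], PRICES["DeepSeek R1"][kind])
        print(f"{kind} savings: {pct:.1f}%")

    # Hypothetical yearly workload, chosen only for illustration.
    for model in PRICES:
        yearly = cost_usd(model, 100_000_000, 20_000_000)
        print(f"{model}: ${yearly:,.2f} per year")
```

Because both prices differ by the same ratio, the percentage saved is the same for input and output tokens, whatever the mix of the workload.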

Practical Value Analysis

1. A Blessing for Individual Users

Most excitingly, ordinary users can now run AI models approaching o1’s level on their own computers:

- Mac users can deploy it in a few simple steps

- Runs on regular computers with less than 32GB of memory

- No need to pay expensive API fees

2. Enterprise Applications

For business users, DeepSeek R1 offers several attractive features:

- Significantly reduced code security analysis costs

- Supports private deployment

- MIT license ensures freedom for commercial use

Technical Innovation Highlights

DeepSeek R1 incorporates several important technical innovations:

- Perfect Combination of Open Source and Performance

  - Achieved a 79.8% pass rate on the AIME 2024 test

  - Outperforms 96.3% of human participants on the Codeforces platform

  - Achieves 90.8% accuracy on the MMLU benchmark

- Flexible Deployment Options

  - Supports local deployment and operation

  - Compatible with computers having less than 32GB of memory

  - Supports multiple operating systems, including macOS

  - Provides private deployment solutions

How to Use DeepSeek R1

To start using DeepSeek R1, follow these steps:

- Online Access

  - Visit the official website directly: https://chat.deepseek.com/

  - Register for free to start using it

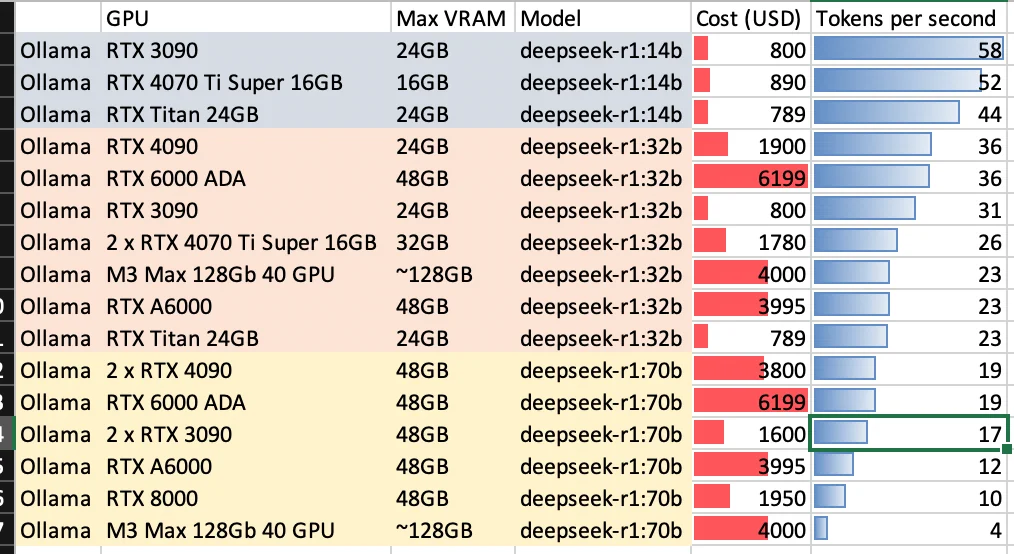

- Quick Local Deployment

  - Download and install Ollama: visit https://ollama.com/download

  - Run a suitable model version:

        # 1.5B version (smallest)
        ollama run deepseek-r1:1.5b

        # 8B version (recommended for beginners)
        ollama run deepseek-r1:8b

        # 14B/32B/70B versions (require a stronger GPU)
        ollama run deepseek-r1:14b
        ollama run deepseek-r1:32b
        ollama run deepseek-r1:70b

  - Using the Chatbox client (optional):

    - Download and install https://chatboxai.app/

    - Select Ollama as the model provider in settings

    - Set the API address to http://127.0.0.1:11434

    - Select an installed model to start a conversation

Reference: https://www.reddit.com/r/ollama/comments/1i6gmgq/got_deepseek_r1_running_locally_full_setup_guide/
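The API address used in the Chatbox settings is Ollama's local HTTP server, so a locally installed model can also be called from a script. The following is a minimal sketch, assuming Ollama is running on the default address and that `deepseek-r1:8b` has already been pulled; the helper names `build_generate_request` and `ask` are hypothetical and exist only for this example. It uses Ollama's `/api/generate` endpoint with streaming turned off, so the full answer arrives in a single JSON object.

```python
import json
import urllib.request

# API address from the Chatbox settings above (Ollama's default local server).
OLLAMA_URL = "http://127.0.0.1:11434"


def build_generate_request(prompt: str, model: str = "deepseek-r1:8b") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:8b", timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    try:
        print(ask("Explain in one sentence what a prime number is."))
    except OSError as err:  # urllib's URLError is a subclass of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```

Only the Python standard library is used here, so nothing beyond Ollama itself needs to be installed. Change the `model` argument to whichever version was started with `ollama run`.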

Running speed and memory requirements on different GPUs

Source: https://www.reddit.com/r/LocalLLaMA/comments/1i69dhz/deepseek_r1_ollama_hardware_benchmark_for_localllm/

Conclusion

The release of DeepSeek R1 is more than a technological breakthrough: it ushers in a new era of AI democratization. It proves that high-performance AI doesn’t have to be expensive proprietary technology but can become a productivity tool accessible to everyone. Individual developers and enterprise users alike can leverage this open-source model to gain more opportunities in the AI era.